PointNet

A PointNet is a neural network architecture capable of performing semantic segmentation or classification on point clouds while dealing directly with unordered three-dimensional data. While achieving high performance in comparison with prior methods, it is also robust to perturbation and corruption of the input data.

Description

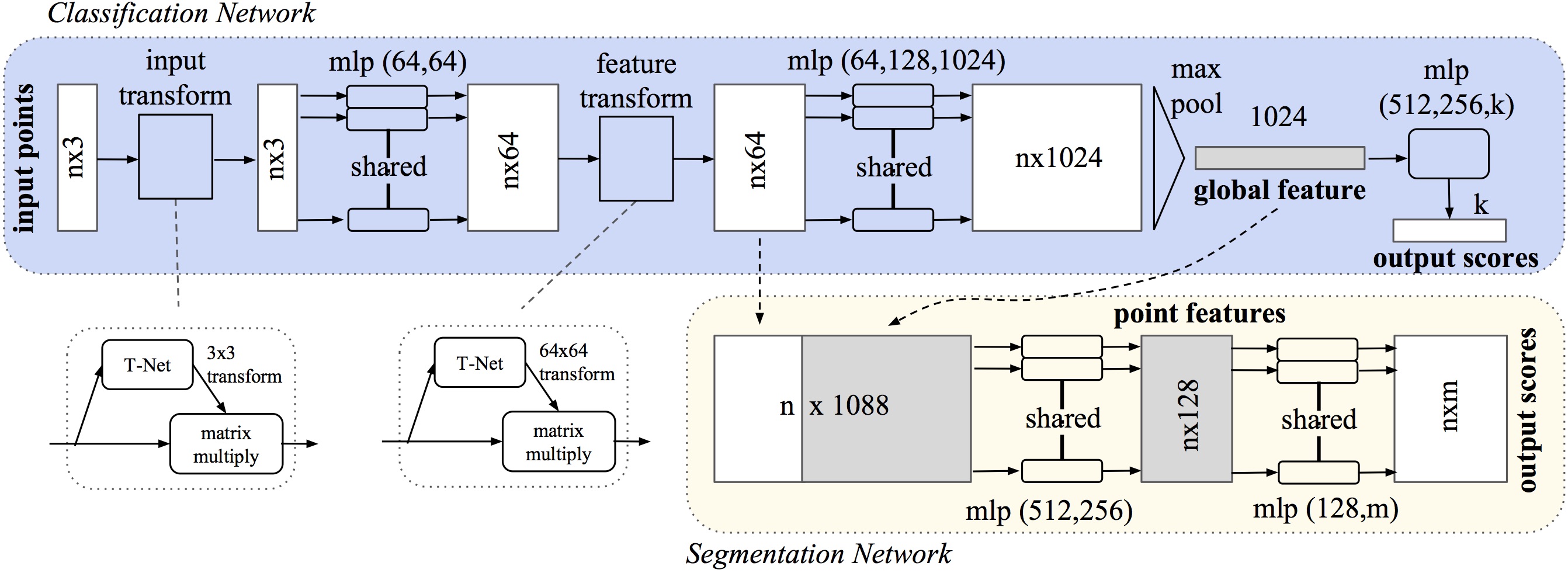

The PointNet architecture is composed of several interconnected modules. The classification and segmentation variants share an identical backbone and differ only in the final, task-specific module.

What does a T-Net do?

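A T-Net is a small sub-network that predicts a transformation matrix from its input; that matrix is then applied to the same input, as shown in the blocks named input transform and feature transform. The input transform predicts a 3 × 3 matrix that acts on the point coordinates, while the feature transform predicts a larger matrix that acts on the per-point features (64 × 64 in the original paper). The size of the predicted matrix is set by the `dimension` argument.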

```python
import torch


class TNet(torch.nn.Module):
    """Predicts one (dimension x dimension) transformation matrix per input cloud."""

    def __init__(self, dimension: int):
        super().__init__()
        self.dimension = dimension
        # Shared per-point layers: a kernel size of 1 applies the same weights to every point
        self.conv1 = torch.nn.Conv1d(dimension, 64, kernel_size=1)
        self.conv2 = torch.nn.Conv1d(64, 128, kernel_size=1)
        self.conv3 = torch.nn.Conv1d(128, 1024, kernel_size=1)
        # Fully connected layers that regress the flattened matrix from the global feature
        self.linear1 = torch.nn.Linear(1024, 512)
        self.linear2 = torch.nn.Linear(512, 256)
        self.linear3 = torch.nn.Linear(256, dimension * dimension)
        self.bn1 = torch.nn.BatchNorm1d(64)
        self.bn2 = torch.nn.BatchNorm1d(128)
        self.bn3 = torch.nn.BatchNorm1d(1024)
        self.bn4 = torch.nn.BatchNorm1d(512)
        self.bn5 = torch.nn.BatchNorm1d(256)

    def forward(self, points: torch.Tensor) -> torch.Tensor:
        # points has shape (batch_size, dimension, sample_size)
        batch_size = points.shape[0]
        sample_size = points.shape[2]
        output = self.bn1(torch.nn.functional.relu(self.conv1(points)))
        output = self.bn2(torch.nn.functional.relu(self.conv2(output)))
        output = self.bn3(torch.nn.functional.relu(self.conv3(output)))
        # Max pooling over all points yields one 1024-dimensional vector per cloud
        output = torch.nn.functional.max_pool1d(output, kernel_size=sample_size).view(batch_size, -1)
        output = self.bn4(torch.nn.functional.relu(self.linear1(output)))
        output = self.bn5(torch.nn.functional.relu(self.linear2(output)))
        output = self.linear3(output)
        # Add the identity (created on the same device and dtype as the output)
        # so that the network only has to learn an offset from the identity transform
        identity = torch.eye(self.dimension, device=output.device, dtype=output.dtype)
        output = output.view(-1, self.dimension, self.dimension) + identity
        return output
```
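To make the role of the predicted matrix concrete, the following minimal sketch applies a transformation to a cloud with a batched matrix product. A fixed 90° rotation about the z-axis stands in for a T-Net output, and the `(batch, coordinates, points)` layout and the left-multiplication convention are assumptions made for illustration; the library may apply the matrix differently.

```python
import torch

# Hypothetical cloud with two points stored as columns: (1, 0, 0) and (0, 1, 0).
# Shape is (batch, coordinates, points) = (1, 3, 2).
points = torch.tensor([[[1.0, 0.0],
                        [0.0, 1.0],
                        [0.0, 0.0]]])

# Stand-in for a T-Net output: a 90-degree rotation about the z-axis, one matrix per cloud.
rotation = torch.tensor([[[0.0, -1.0, 0.0],
                          [1.0, 0.0, 0.0],
                          [0.0, 0.0, 1.0]]])

# Batched matrix product: every point of cloud b is multiplied by rotation[b].
aligned = torch.bmm(rotation, points)

print(aligned.shape)  # torch.Size([1, 3, 2])
```

The first point moves from (1, 0, 0) to (0, 1, 0) and the second from (0, 1, 0) to (-1, 0, 0), while the shape of the cloud tensor is unchanged.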

The same backbone serves both classification and segmentation. In both cases, each point is mapped to 1024 features, and a max pooling layer over all points reduces them to a single 1024-dimensional global feature that describes the whole cloud. Because the maximum does not depend on the order of its arguments, the global feature is the same however the points are ordered.
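The order independence of the pooled feature can be checked with a short sketch. A single kernel-size-1 convolution stands in for the full per-point feature extractor here, which is a simplification for illustration and not the library's actual backbone.

```python
import torch

torch.manual_seed(0)

# Shared per-point layer: the same weights map each point's 3 coordinates to 1024 features.
per_point = torch.nn.Conv1d(3, 1024, kernel_size=1)

cloud = torch.rand(1, 3, 100)                # (batch, coordinates, points)
shuffled = cloud[:, :, torch.randperm(100)]  # same points, different order

with torch.no_grad():
    # Taking the maximum over the point axis collapses (1, 1024, 100) to (1, 1024).
    global_a = per_point(cloud).max(dim=2).values
    global_b = per_point(shuffled).max(dim=2).values

print(global_a.shape)  # torch.Size([1, 1024])
print(torch.allclose(global_a, global_b, atol=1e-6))
```

Both clouds yield the same global feature up to floating-point tolerance, since shuffling only reorders the values that the maximum is taken over.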

For classification, the 1024-dimensional global feature is then transformed into class scores.
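As a rough sketch of this step, a small fully connected head can map the global feature to one score per class. The layer widths (1024, 512, 256) and the value of `n_classes` are illustrative assumptions, and normalization and dropout layers are omitted for brevity; the library's head may be laid out differently.

```python
import torch

n_classes = 10  # hypothetical number of classes

# Assumed head layout: 1024 -> 512 -> 256 -> n_classes.
head = torch.nn.Sequential(
    torch.nn.Linear(1024, 512),
    torch.nn.ReLU(),
    torch.nn.Linear(512, 256),
    torch.nn.ReLU(),
    torch.nn.Linear(256, n_classes),
)

global_features = torch.rand(4, 1024)  # one global feature vector per cloud
scores = head(global_features)         # (4, n_classes), unnormalized class scores
predicted = scores.argmax(dim=1)       # index of the highest-scoring class per cloud

print(scores.shape)     # torch.Size([4, 10])
print(predicted.shape)  # torch.Size([4])
```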

For segmentation, the global feature is instead copied once per point and concatenated with the 64 per-point features extracted earlier, so that every point carries both local and global information. After further per-point transformations, each point is mapped to its class scores.
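The concatenation can be sketched with plain tensor operations. The batch size, number of points, and number of classes below are hypothetical, and a single kernel-size-1 convolution stands in for the per-point transformations that follow the concatenation.

```python
import torch

batch_size, n_points, n_classes = 2, 100, 10  # hypothetical sizes

point_features = torch.rand(batch_size, 64, n_points)  # 64 local features per point
global_feature = torch.rand(batch_size, 1024)          # one global vector per cloud

# Repeat the global vector along the point axis, then append it to the local features.
tiled = global_feature.unsqueeze(2).expand(-1, -1, n_points)
combined = torch.cat([point_features, tiled], dim=1)   # (2, 64 + 1024, 100)

# Stand-in for the remaining per-point layers: one shared layer producing class scores.
to_scores = torch.nn.Conv1d(64 + 1024, n_classes, kernel_size=1)
scores = to_scores(combined)                           # (2, 10, 100)

print(combined.shape)  # torch.Size([2, 1088, 100])
print(scores.shape)    # torch.Size([2, 10, 100])
```

Each point ends up with a 1088-dimensional descriptor, of which the last 1024 entries are identical for all points of the same cloud.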

Usage

A PointNet for semantic segmentation can be instantiated and trained as follows.

```python
from deepoints.models.pointnet import PointNetSegmentation
import lightning

# defines a trainer instance
trainer = lightning.Trainer(...)

# instantiates the PointNet model to be trained
model = PointNetSegmentation(
    # number of classes of the dataset
    n_classes = 10
)

# trains and validates the model on a specific dataset
trainer.fit(model, datamodule = ...)
```

References

Here's a reference to the original paper.